Conversion rate optimization is complex, with many factors to weigh and balance. When it comes to creating marketing assets that drive conversions, there’s no single tactic that works every time. Every organization is unique, with its own goals, target audience, and market conditions. What works for one business may not work for another, even within the same industry, because of differences in brand identity, customer behavior, and competitive landscape.

Most marketers are aware of this and can build effective campaigns from a combination of intuition and knowledge of their audience. They rely on experience and an understanding of market trends to craft messages that resonate. However, intuition doesn’t show whether your marketing is working. It doesn’t help you identify the mistakes, problems, and bottlenecks hindering your success, and it doesn’t let you fine-tune your content to be as effective as possible. That’s where A/B testing comes in: it provides a data-driven way to see what works and what doesn’t, so marketers can make informed decisions and continuously improve their strategies.

What is A/B Testing?

A/B testing involves creating two or more versions of a marketing asset, such as a webpage, email, or advertisement, to determine which version performs best against a desired outcome. Each version differs from the others in only one variable, such as a headline, an image, the color of a button, or the placement of a design element. This ensures that any difference in performance can be attributed to the specific change made, giving clear insight into what resonates with the audience. An effective A/B test begins with a clearly defined goal, such as increasing click-through rates, boosting conversion rates, or improving user engagement. That goal guides the test, keeps the results aligned with your overall marketing objectives, and gives you a focused basis for judging each version.

How to Conduct an Effective A/B Test

Here is an example that will likely resonate with most marketers. You might suspect that increasing the size of a call-to-action button in an email newsletter will improve conversions. You would then determine a sample size and send one half of the sample group the newsletter with the original button and the other half the large-button version. After measuring the results, you can decide whether to run another A/B test or send the "winning" version to the rest of your mailing list. Note that only one thing was tested: the call-to-action button. This matters because when only one element differs between versions, you can attribute any difference in results to that single change.
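To make "measuring the results" more concrete, here is a minimal sketch of one common way to check whether the difference between two versions is larger than chance would explain: a two-proportion z-test. The function name and the send and conversion counts are hypothetical and chosen only for illustration; your marketing platform may use a different method.

```python
import math


def two_proportion_z_test(conversions_a, sends_a, conversions_b, sends_b):
    """Compare two conversion rates with a two-sided two-proportion z-test.

    Returns (rate_a, rate_b, z, p_value).
    """
    rate_a = conversions_a / sends_a
    rate_b = conversions_b / sends_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (conversions_a + conversions_b) / (sends_a + sends_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sends_a + 1 / sends_b))
    if std_err == 0:
        raise ValueError("Cannot test: no variation in the observed results.")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value


# Hypothetical numbers: original button (A) vs. large button (B).
rate_a, rate_b, z, p_value = two_proportion_z_test(50, 1000, 75, 1000)
print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  z = {z:.2f}  p = {p_value:.3f}")
```

A small p-value (a common convention is below 0.05) suggests the difference is unlikely to be random noise. A large one suggests running the test longer or on a bigger sample before picking a winner.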

For web pages and other content that doesn't have a finite audience, an A/B test runs over an extended period rather than targeting a specific sample size. Unlike email campaigns or targeted advertisements, web pages are open to a broad and often unpredictable audience, so the test needs to run long enough to gather enough data for the results to be statistically significant. How long that takes depends largely on the website's traffic volume. A high-traffic site might reach the necessary data threshold in a matter of days, while a site with fewer visitors might need several weeks to reach the same level of statistical confidence. When the test has run long enough, marketers can analyze the results knowing they reflect genuine user behavior and preferences, which leads to better-informed decisions about optimizing web content.
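The relationship between traffic and test duration can be sketched with a standard sample-size approximation for comparing two conversion rates. This is a rough planning estimate, not a definitive method. The function names, the 5% baseline rate, the 6% target rate, and the traffic figures are all assumptions made for illustration.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant to detect the given lift."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def days_to_run(needed_per_variant, daily_visitors, variants=2):
    """Days required if daily traffic is split evenly across the variants."""
    return math.ceil(needed_per_variant * variants / daily_visitors)


# Hypothetical goal: detect a lift from a 5% to a 6% conversion rate.
needed = visitors_per_variant(0.05, 0.06)
print("Visitors needed per variant:", needed)
print("High-traffic site (2,000 visitors/day):", days_to_run(needed, 2000), "days")
print("Low-traffic site (200 visitors/day):", days_to_run(needed, 200), "days")
```

The smaller the improvement you want to detect, the more visitors you need, which is why low-traffic sites often test larger, bolder changes.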

If you want to add another layer of insight to your testing data, consider giving your audience exit or post-test surveys. These surveys can be a powerful tool for understanding the motivations and experiences of your users. Keep your core goal in mind and ask about the reasons behind their actions: why they took a particular course of action, what influenced their decision, and how they felt about the overall experience. The answers give you direct feedback on why someone took a specific path on your site, highlighting things they liked, aspects they found confusing, and areas that may need improvement. Such feedback can be invaluable, especially in a website redesign project, because it comes straight from the people using your site.

You also don’t need to conduct an A/B test manually. Most digital marketing platforms, such as HubSpot, include A/B testing functionality, making it easier to set up and analyze tests. You can A/B test call-to-action buttons, landing pages, web pages, emails, and more, which lets you optimize different parts of your marketing strategy efficiently.

What to Do After an A/B Test

Once you have gathered enough data and figured out which variation performs best, your next step is deciding whether the result is strong enough to justify a change. Paint as complete a picture as possible, using multiple metrics so your conclusion is sound. It’s important to understand that no metric exists in a vacuum. Let’s say, for example, you are A/B testing two different email subject lines. One subject line might have a better open rate, while the other results in more conversions among the users who open it. So avoid fixating on any single number in isolation.

Finally, understand that a single A/B test isn’t enough to optimize your conversion process. You should always look forward, thinking about what you can test next. At the same time, remember that each A/B test should have an end. You don't need to run the same test forever. Get the results you need, pick your "winning" version, and move on to your next project.
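To illustrate the subject-line example above, the short sketch below compares two variants on several metrics at once. The class name and all of the numbers are hypothetical and exist only to show how the "winner" can change depending on which metric you look at.

```python
from dataclasses import dataclass


@dataclass
class SubjectLineResult:
    name: str
    sent: int
    opened: int
    converted: int

    @property
    def open_rate(self) -> float:
        return self.opened / self.sent

    @property
    def conversion_rate_per_open(self) -> float:
        return self.converted / self.opened if self.opened else 0.0

    @property
    def conversion_rate_per_send(self) -> float:
        return self.converted / self.sent


# Hypothetical results for two subject lines sent to equal-sized groups.
results = [
    SubjectLineResult("Subject line A", sent=1000, opened=300, converted=15),
    SubjectLineResult("Subject line B", sent=1000, opened=220, converted=22),
]

for r in results:
    print(f"{r.name}: open rate {r.open_rate:.1%}, "
          f"conversions per open {r.conversion_rate_per_open:.1%}, "
          f"conversions per send {r.conversion_rate_per_send:.1%}")

best_open = max(results, key=lambda r: r.open_rate)
best_conversion = max(results, key=lambda r: r.conversion_rate_per_send)
print("Best open rate:", best_open.name)
print("Best conversion rate per send:", best_conversion.name)
```

In this made-up data, one subject line wins on opens while the other wins on conversions, so the right choice depends on the goal you defined before the test began.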

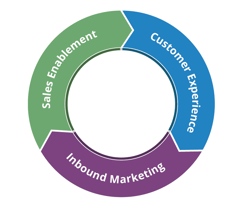

Need help getting started? We invite you to schedule a complimentary inbound marketing consultation, and we can discuss areas you may want to consider A/B testing to get immediate and actionable data on how to optimize your conversion.
